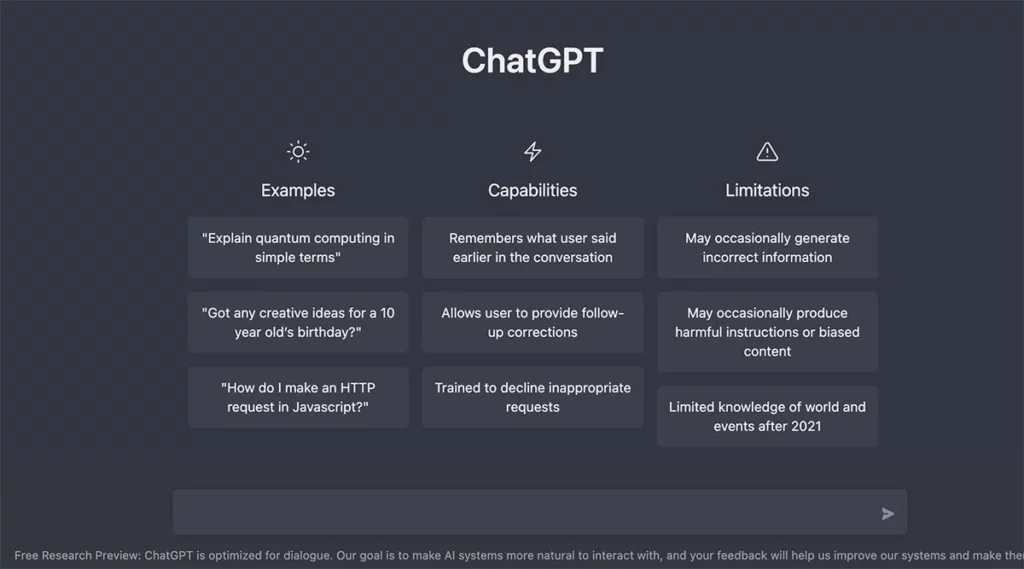

OpenAI has steadily improved ChatGPT, focusing on how well it answers questions, how it accesses information, and the quality of its underlying models. Its latest update, however, changes how users interact with ChatGPT itself. A new version of the service lets users prompt the chatbot not only by typing but also by speaking or uploading images. These features will reach ChatGPT subscribers within the next two weeks, with a broader rollout planned shortly after.

Voice input is a familiar concept. Users tap a button and speak their query. ChatGPT converts the speech to text, processes the request with its language model, and replies with a spoken answer. The interaction resembles talking to virtual assistants such as Alexa or Google Assistant, but OpenAI expects ChatGPT's answers to be more accurate and informative because of improvements in its underlying technology. Many virtual assistants are increasingly built on large language models (LLMs), and OpenAI is at the forefront of this trend.

OpenAI uses its Whisper model for speech-to-text and is introducing a new text-to-speech model that can generate "human-like audio from just text and a few seconds of sample speech." Users can choose from five voices for ChatGPT. OpenAI is also exploring other uses for synthetic voices, such as translating podcasts into other languages while preserving the podcaster's voice. These capabilities also raise concerns about misuse, including impersonation and fraud. To reduce these risks, OpenAI plans to tightly control access to the technology and limit it to specific use cases and partnerships.

The image feature in the updated ChatGPT is reminiscent of Google Lens. Users take a photo of an object or scene, and ChatGPT tries to understand what it shows and respond accordingly. To clarify a query, users can mark up the image with the app's drawing tool, or speak or type a question alongside it. This fits ChatGPT's conversational design: users refine their question through back-and-forth dialogue rather than crafting keyword searches, an approach that closely mirrors Google's own multimodal search efforts.

Image search brings its own challenges, particularly when people appear in the photos. OpenAI has deliberately limited ChatGPT's ability to analyze and make direct statements about individuals, citing both accuracy and privacy. As a result, the futuristic idea of an AI that recognizes and identifies people from images remains distant.

ChatGPT's development shows OpenAI expanding the product's capabilities while trying to address the problems those capabilities create. With these updates, the company has chosen to set firm limits on what its new models can do. As voice control and image search grow more popular, and as ChatGPT moves closer to becoming a fully multimodal virtual assistant, holding those limits will become harder. Balancing AI capabilities against ethical and privacy concerns is likely to demand increasingly complex trade-offs.