In a groundbreaking development, scientists have packed a large number of tiny sensors onto a flexible device no larger than a postage stamp to decipher the intricate electrical signals associated with speech. This innovative ‘speech prosthetic’ holds the promise of transforming communication for people whose speech is impaired by neurological conditions.

When we speak, our brains coordinate a complex set of muscles by sending electrical signals to the lips, tongue, jaw, and larynx. The new device uses a dense array of miniature sensors to read these signals and predict the sounds a person is trying to make. This is not mind-reading: the sensors pick up the brain's commands for the intended movements of the speech muscles, not a person's thoughts.

Co-senior author Gregory Cogan, a neuroscientist at Duke University, highlighted the potential impact on people with motor disorders: “There are many patients who suffer from debilitating motor disorders, like ALS or locked-in syndrome, that can impair their ability to speak. But the current tools available to allow them to communicate are generally very slow and cumbersome.”

Commenting on the current state of the technology, co-senior author and Duke University biomedical engineer Jonathan Viventi said, “We’re at the point where it’s still much slower than natural speech, but you can see the trajectory where you might be able to get there.”

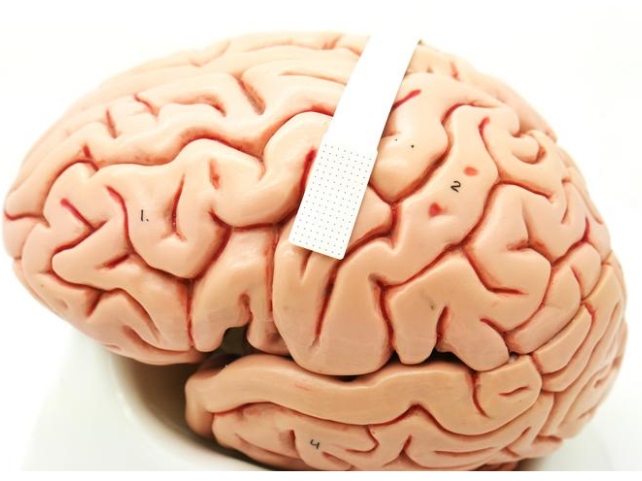

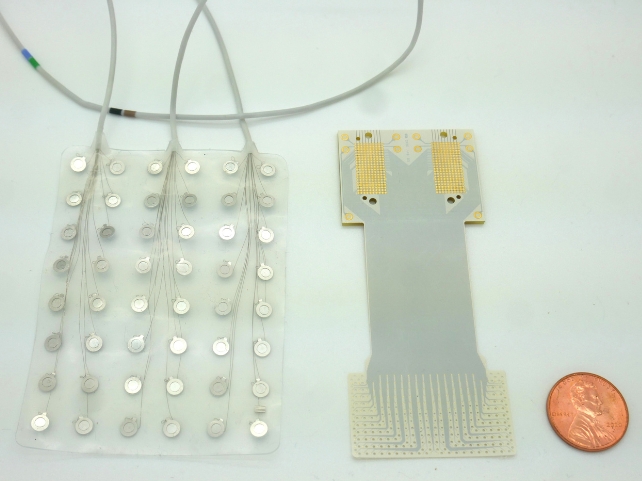

The researchers built the electrode array on ultrathin, flexible, medical-grade plastic, with electrodes spaced less than two millimeters apart. This close spacing lets the array detect distinct signals even from neurons that lie very close together. To test how well these micro-scale brain recordings could decode speech, the team temporarily implanted the device in four patients who had no speech impairment.

While the device was in place, the team recorded activity in the speech motor cortex as the patients repeated 52 meaningless words. The recordings showed that different phonemes produced distinct patterns of neural firing, suggesting that the brain adjusts speech production dynamically, in real time. Duke University biomedical engineer Suseendrakumar Duraivel then used a machine learning algorithm to evaluate the recordings, predicting some sounds with up to 84 per cent accuracy.

Despite these limitations, the researchers are optimistic about refining the technology. A substantial grant from the National Institutes of Health will support further research, including the development of wireless recording devices that would give users greater mobility.

In the words of Cogan, “We’re now developing the same kind of recording devices, but without any wires. You’d be able to move around, and you wouldn’t have to be tied to an electrical outlet, which is really exciting.”

This promising start points toward a future in which people with speech limitations might communicate by having their intended speech decoded directly from brain signals, offering renewed hope for those facing neurological challenges.