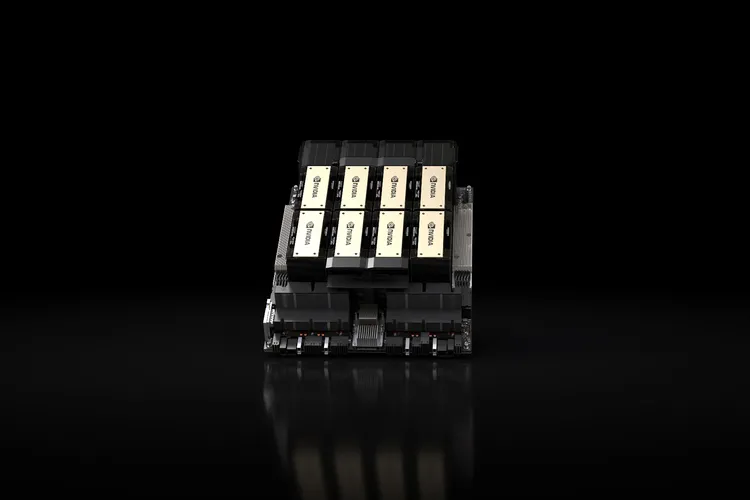

Nvidia is introducing a new high-end AI chip, the HGX H200, as an upgrade to the in-demand H100. The H200 offers 1.4x the memory bandwidth and 1.8x the memory capacity of the H100, improving its ability to handle intensive generative AI workloads.

However, it is unclear how readily available the new chips will be, and Nvidia has not given clear answers about potential supply constraints. The first H200 chips are scheduled for release in the second quarter of 2024, and Nvidia says it is working with global system manufacturers and cloud service providers to bring them to market.

The H200 uses faster HBM3e memory, which improves performance for generative AI models and high-performance computing applications. Although it is otherwise similar to the H100, the H200’s enhanced memory specifications mark a significant upgrade. Cloud providers such as Amazon, Google, Microsoft, and Oracle are expected to add H200s to their existing systems without needing to make changes.

Nvidia has not disclosed pricing, but previous-generation H100s are estimated to cost between $25,000 and $40,000 each. The announcement comes amid a continued scramble for H100 chips, which are viewed as crucial for processing data efficiently in AI applications. AI companies are actively seeking these chips, and the shortage has led to collaborations and even to H100s being used as collateral for loans.

Nvidia says that the introduction of the H200 will not affect H100 production and that it plans to increase overall supply throughout the year. Despite efforts to triple H100 production in 2024, demand remains high, fueled by the ongoing growth of generative AI applications.