Several OpenAI staff researchers wrote a letter to the board of directors warning of a powerful artificial intelligence discovery that they said could threaten humanity, according to two people familiar with the matter.

The previously unreported letter and AI algorithm were key developments that preceded the board's decision to remove Sam Altman, the face of generative AI, according to the same sources. Before Altman's eventual return, more than 700 employees had threatened to quit and join Microsoft, OpenAI's primary backer, in solidarity with their ousted leader.

The sources cited the letter as one factor in a longer list of grievances that led to Altman's dismissal, including concerns about commercializing advances before their consequences were understood. The letter could not be reviewed, and the researchers who wrote it did not respond to requests for comment.

According to one person familiar with the matter, OpenAI acknowledged the existence of a project called Q* and of a letter to the board in an internal message to staff before the weekend's events. The message, sent by long-time executive Mira Murati, alerted staff to certain media stories without commenting on their accuracy.

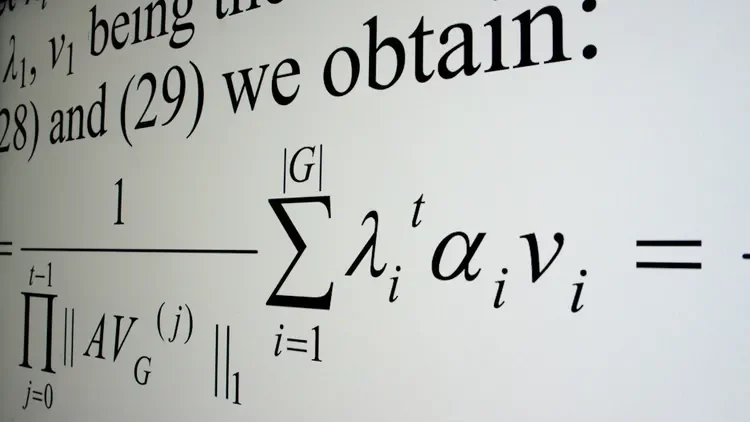

Some within OpenAI see Q* (pronounced Q-Star) as a potential breakthrough in the startup's quest for artificial general intelligence (AGI). OpenAI defines AGI as autonomous systems that surpass humans in most economically valuable tasks. Given vast computing resources, the new model was able to solve certain mathematical problems, though only at the level of grade-school students.

Researchers consider mathematics a frontier of generative AI development. Current generative AI is good at writing and language translation because it statistically predicts the next word, so answers to the same question can vary widely. Mastering mathematics, where there is only one correct answer, would imply greater reasoning capabilities resembling human intelligence. AI researchers say this could be applied to novel scientific research.

Unlike a calculator, which can perform only a limited number of operations, AGI would be able to generalize, learn, and comprehend. The researchers' letter to the board flagged both the prowess and the potential danger of AI, though the sources did not specify the exact safety concerns it raised. Computer scientists have long debated the danger posed by highly intelligent machines, for instance if they were to decide that destroying humanity was in their interest.

Researchers also flagged work by an "AI scientist" team, whose existence multiple sources confirmed. The team, formed by combining the earlier "Code Gen" and "Math Gen" teams, was exploring how to optimize existing AI models to improve their reasoning and eventually perform scientific work.

Altman led efforts to make ChatGPT one of the fastest-growing software applications in history and secured the investment and computing resources from Microsoft needed to move closer to AGI. Besides unveiling a slew of new tools in a recent demonstration, Altman hinted that major AI advances were in sight at a summit of world leaders in San Francisco:

> "Four times now in the history of OpenAI, the most recent time was just in the last couple weeks, I've gotten to be in the room, when we sort of push the veil of ignorance back and the frontier of discovery forward, and getting to do that is the professional honor of a lifetime."
>
> Sam Altman at the Asia-Pacific Economic Cooperation summit

A day later, the board fired Altman.