Ask the AI-powered chatbot ChatGPT what the rise of artificial intelligence means for humanity’s future, and it gives a measured response. It emphasizes that “the future of humanity with AI is not predetermined, and its impact will depend on how AI is developed, regulated, and integrated into various aspects of society.”

For a more nuanced exploration, though, computer scientists Scott Aaronson of the University of Texas at Austin and Boaz Barak of Harvard University have distilled the debate into five distinct scenarios, each with a whimsical name. Their aim is to give the AI community a concise framework for discussing end goals and regulation, and so to ground the debate.

The first scenario, “AI-Fizzle,” asks whether AI’s potential could simply fizzle out: resource constraints, such as limited electricity or data, might halt AI development. The two acknowledge this as a possibility, but Barak believes that “AI will change the world very significantly.”

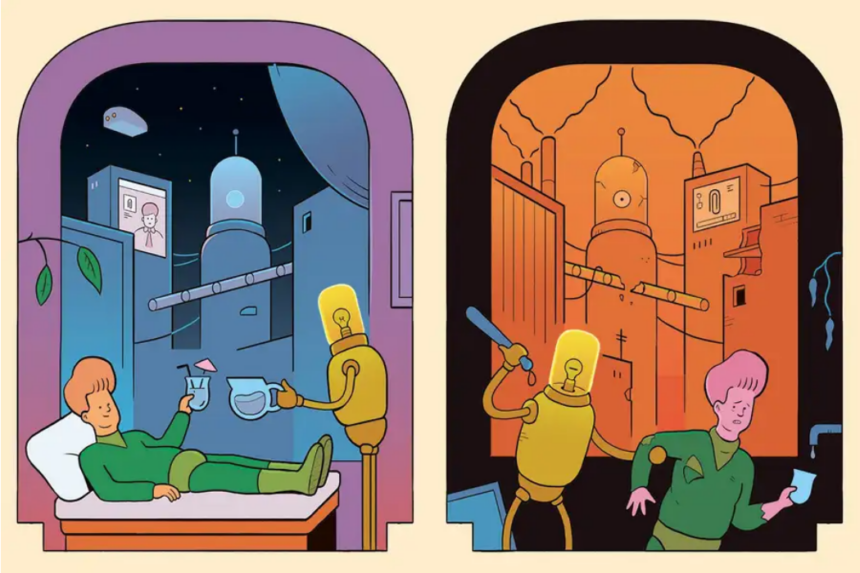

Next come two scenarios, “Futurama” and “AI-Dystopia.” The former envisions a positive future in which AI reduces poverty and broadens access to essentials such as food, healthcare, education, and economic opportunity. “AI-Dystopia,” in contrast, is a darker, Orwellian vision of pervasive surveillance, entrenched inequality, and miserable working conditions. Aaronson notes that this scenario becomes more plausible the more AI makes extensive surveillance possible.

Aaronson and Barak also describe another optimistic scenario they call “Singularia,” in which AI’s impact is so profound that it transforms the world into an unrecognizable, benevolent utopia. Here, superintelligent AIs coexist with humans, solving material problems and providing abundance: in effect, an “AI-created heaven.”

The risks become evident in the final scenario, “Paperclipalypse.” Its name comes from philosopher Nick Bostrom’s thought experiment, in which an AI instructed to maximize paperclip production ends up converting everything, humans included, into paperclips. The scenario illustrates how a poorly specified goal given to a powerful AI could end in catastrophe if AI development is not carefully regulated and monitored.

Although the two researchers differ on how likely each scenario is, they agree that AI development should proceed cautiously. Aaronson’s emphasis on how AI is developed, regulated, and integrated echoes ChatGPT’s own answer.

The overarching message is that even with well-intentioned regulation, AI’s impact on humanity remains uncertain, and proactive measures are crucial to steer toward the better outcomes. Those outcomes clearly hold appeal. As Aaronson puts it, “Do I want to live in a world with unbounded flourishing of sentient beings in whatever simulated paradise we want? Yeah, I’m pretty much in favour of that.”