A team of scientists at the University of Tokyo has linked large language models to a humanoid robot, enabling more humanlike gestures without relying on conventional hardware-dependent controls.

Alter3, an advanced humanoid robot, is now guided by GPT-4 through tasks such as taking a selfie, tossing a ball, eating popcorn, and playing air guitar. The approach removes the need to hand-code each action, allowing the robot to act on natural language instructions instead. The researchers describe it as “a paradigm shift” that enables direct control by mapping linguistic expressions onto the robot’s body through program code.

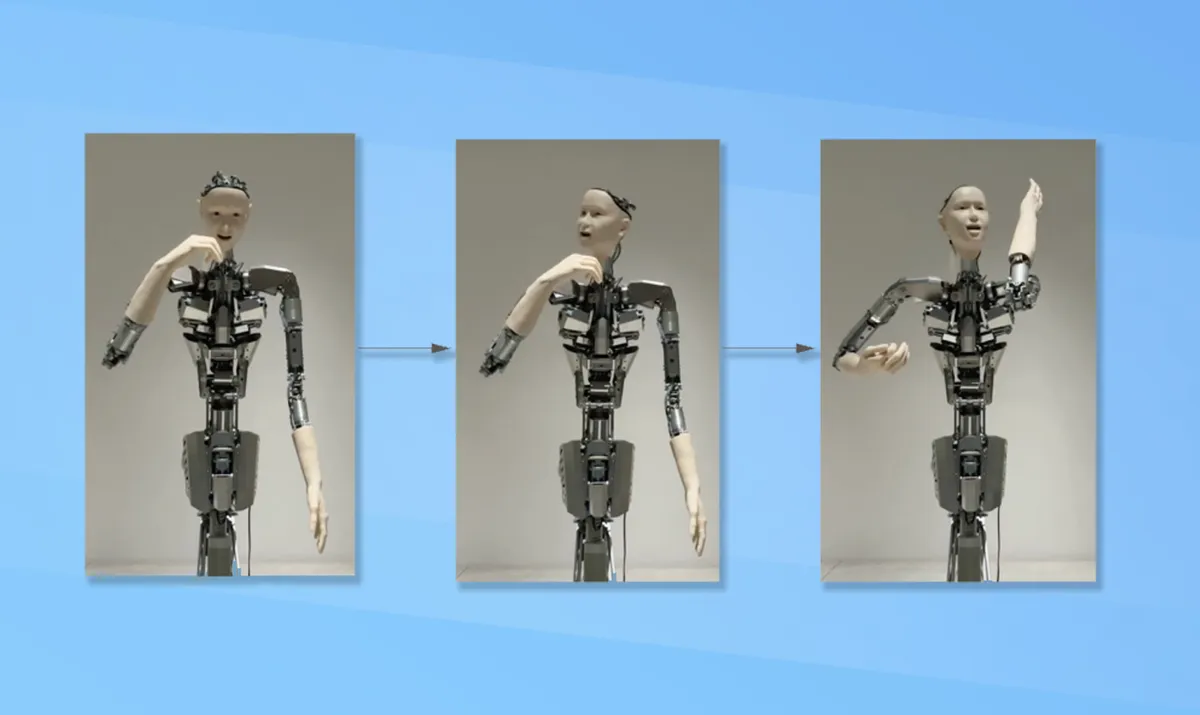

Alter3, the third generation of the Alter humanoid series first deployed in 2016, is capable of intricate upper-body movements and detailed facial expressions, with 43 axes simulating human musculoskeletal motion. Coordinating that many joints by hand is laborious, iterative work, and GPT-4 relieves the researchers of it. A verbal description of the desired movement is given to GPT-4, which converts it into Python code for the robot. Alter3 also retains activities in memory, so movements can be refined and adjusted, becoming more efficient and precise over time.
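The instruction-to-code flow described above can be illustrated with a minimal sketch. Everything named here is an assumption made for illustration, not the researchers' actual interface: `set_axis`, the 1 to 43 axis numbering, the 0 to 255 value range, the specific axis numbers, and `fake_llm`, which stands in for a real GPT-4 call by returning canned code. Only the count of 43 axes comes from the article.

```python
from typing import Callable, Dict, List, Tuple

NUM_AXES = 43  # axis count stated in the article


class RobotStub:
    """Stand-in for the robot: records axis commands instead of driving motors."""

    def __init__(self) -> None:
        self.log: List[Tuple[int, int]] = []

    def set_axis(self, axis: int, value: int) -> None:
        # Hypothetical command; the numbering and value range are assumptions.
        if not 1 <= axis <= NUM_AXES:
            raise ValueError(f"axis {axis} out of range")
        if not 0 <= value <= 255:
            raise ValueError(f"value {value} out of range")
        self.log.append((axis, value))


def fake_llm(instruction: str) -> str:
    """Placeholder for a GPT-4 call: returns canned Python for a known instruction."""
    canned = {
        # Axis numbers below are invented for the example.
        "raise the right hand": "set_axis(30, 200)\nset_axis(31, 120)",
    }
    return canned[instruction]


class MotionMemory:
    """Generates motion code once per instruction and reuses it afterwards."""

    def __init__(self, llm: Callable[[str], str], robot: RobotStub) -> None:
        self.llm = llm
        self.robot = robot
        self.cache: Dict[str, str] = {}
        self.llm_calls = 0

    def perform(self, instruction: str) -> None:
        code = self.cache.get(instruction)
        if code is None:
            # First time this instruction is seen: ask the model and remember it.
            code = self.llm(instruction)
            self.llm_calls += 1
            self.cache[instruction] = code
        # Expose only set_axis to the generated code. This limits the names
        # available to it but is not a real security sandbox.
        exec(code, {"__builtins__": {}, "set_axis": self.robot.set_axis})


if __name__ == "__main__":
    robot = RobotStub()
    memory = MotionMemory(fake_llm, robot)
    memory.perform("raise the right hand")
    print(robot.log)
```

Caching the generated code by instruction is one simple way to model the idea that stored activities can be reused and later adjusted. A real system would also need to validate model output before running it.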

To take a selfie, for example, Alter3 is given natural language instructions such as creating a joyful smile, turning the upper body to the left, and raising the right hand as if holding a phone. According to the researchers, this use of large language models in robotics research reshapes the landscape of human-robot collaboration, ushering in more intelligent, adaptable, and personable robots.

Injecting humour into Alter3’s activities, the researchers showcased a scenario in which the robot pretends to eat popcorn, only to realize it belongs to someone else, and conveys surprise and embarrassment through exaggerated facial expressions and gestures. The camera-equipped Alter3 also observes humans and refines its behaviour based on their responses. The “zero-shot” capacity of GPT-4-connected robots, meaning they can attempt actions without task-specific training examples, holds the potential to redefine the boundaries of human-robot collaboration.