AI’s impact on our world is expected to be significant, yet the collective panic and fascination surrounding the emerging technology in 2023 surpassed the reaction to any other dramatic event. With billions of dollars at stake and the promise that AI will revolutionize every aspect of contemporary life, everyone from businesses to regulators to regular individuals spent the past year eagerly trying to get involved. The rush also produced some noteworthy instances of what can be termed “techno-stupidity.”

Numerous businesses shifted their focus to AI-centric models, while individuals formed emotional connections with AI sexbots. Additionally, a generation of online opportunists established a lucrative empire by exploiting AI art and offering courses that promised effortless financial gains through the use of this technology.

A $700 mobile device to bring AI nonsense generators on the road

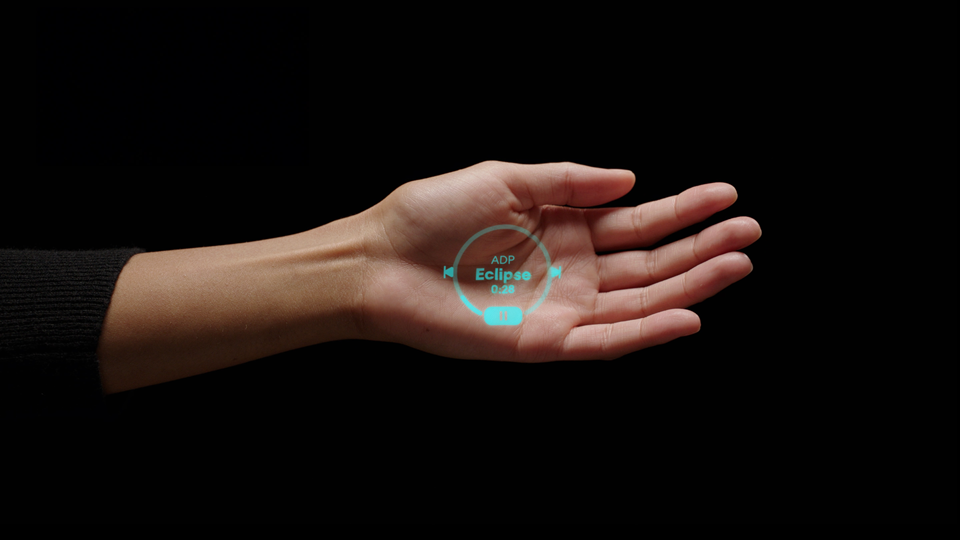

A startup named Humane gained considerable attention with its $700 AI-centric mobile device, promoted as a substitute for traditional cell phones. The Humane AI Pin, designed to be clipped onto clothing, omits a screen and relies on voice commands powered by ChatGPT. It also features a small projector that can display text on your outstretched palm or other surfaces.

The product launch included a Steve Jobs-style video presentation that, according to many observers, conveyed an air of seriousness. The demonstration nonetheless exposed the product’s shortcomings, making the AI Pin and its creator look somewhat absurd or, at the very least, unfocused. Notably, the AI made factual errors in the company’s own promotional video, giving inaccurate information about the best place to observe an upcoming eclipse and misstating the protein content of a handful of almonds.

AI leaders sign a letter begging humanity to save the world from…themselves

Prominent figures in the field of artificial intelligence came together to address the severe implications of AI on society, emphasizing potential threats such as misinformation, algorithmic bias, and unemployment. There is also a theoretical concern that the tech industry could develop highly intelligent AI, posing an existential threat to society.

This existential concern is the one the AI industry itself keeps underscoring, urging attention to the potential catastrophic consequences. Two things are true at once: the risk is worth considering, and the emphasis on hypothetical future issues draws focus away from the real problems that AI is already causing.

In May, a significant event unfolded as more than 350 AI executives, researchers, and industry leaders signed a concise yet impactful one-sentence open letter. The plea was directed at society, urging collective action to prevent AI technology from leading to the world’s destruction. The letter emphasized the urgency of mitigating the risk of AI-induced extinction, positioning it as a global priority comparable to other large-scale societal risks like pandemics and nuclear war.

In essence, influential individuals used the letter to express deep concern about the potential dangers of AI. The act of signing it nonetheless appeared paradoxical: millionaires acknowledged the frightening aspects of their creations while remaining committed to developing them. It came across as an exceptionally misguided example of grandstanding, adding to the overall sense of absurdity in an already tumultuous year.

Elon Musk builds an anti-woke chatbot and then gets mad at how woke it is

Elon Musk entered the arena of AI-driven chatbots in response to perceived left-wing bias in AI models like ChatGPT. Some of the internet’s angrier users had complained that executives at OpenAI and similar companies programmed their AI systems to echo left-wing viewpoints.

In response, Musk commissioned his own chatbot, Grok, which was introduced as part of the premium package on X, the platform formerly known as Twitter. The company asserted that Grok was designed to have “a bit of a rebellious streak” and was specifically trained to tackle “spicy questions” that other AI systems might reject.

However, shortly after Grok’s launch, right-wing users on X objected that Grok exhibited the same “woke” characteristics as its competitors. Criticisms included the AI’s reluctance to automatically adopt right-wing political beliefs, particularly on issues such as Islam and transgender women. Musk responded by attributing this behaviour to the prevalence of “woke nonsense” on the internet and pledged to improve Grok to address these concerns.

Congress asks if the AI business would like to regulate itself

Concerns about the potential apocalyptic outcomes of AI technology prompted regulatory bodies to address the issue, or at least create the appearance of taking action. A notable instance occurred in May when the Senate Judiciary Committee summoned OpenAI CEO Sam Altman for a hearing.

Despite expectations of a rigorous interrogation befitting one of Silicon Valley’s most influential figures, the lead-up to the hearing saw Altman engaging in friendly interactions and building rapport with politicians. This charm offensive proved successful, resulting in a relatively calm and amicable affair. Politicians not only complimented Altman but also addressed him by his first name, evoking a sense of familiarity. In a surprising turn, one senator went so far as to inquire whether Altman would be interested in leading a new regulatory agency to oversee the AI industry. Altman politely declined the offer.

Lawyers fined for submitting bogus AI-written legal documents

Legal professionals faced consequences when two lawyers in New York City were fined for submitting fabricated legal documents generated by ChatGPT. Despite the known tendency of ChatGPT to generate inaccurate information, lawyers Peter LoDuca and Steven A. Schwartz utilized AI to produce legal documents containing fictitious quotes and citations. Astonishingly, they submitted these documents without conducting due diligence to verify the accuracy of the information.

The misconduct came to light when the court identified six imaginary legal cases used as citations. Subsequently, the judge accused LoDuca and Schwartz of compounding the issue by providing deceptive explanations when confronted with their invented case law. Schwartz offered “shifting and contradictory explanations,” and LoDuca attempted to buy more time by pretending to be on vacation.

Federal Judge P. Kevin Castel characterized the lawyers and their firm, Levidow, Levidow & Oberman, P.C., as acting in bad faith and deliberately deceiving the court to conceal their mistakes. In the judge’s decision, it was noted that the respondents not only neglected their responsibilities by submitting non-existent judicial opinions with fabricated quotes and citations generated by ChatGPT but also persisted in defending these fraudulent opinions even after judicial orders questioned their validity.

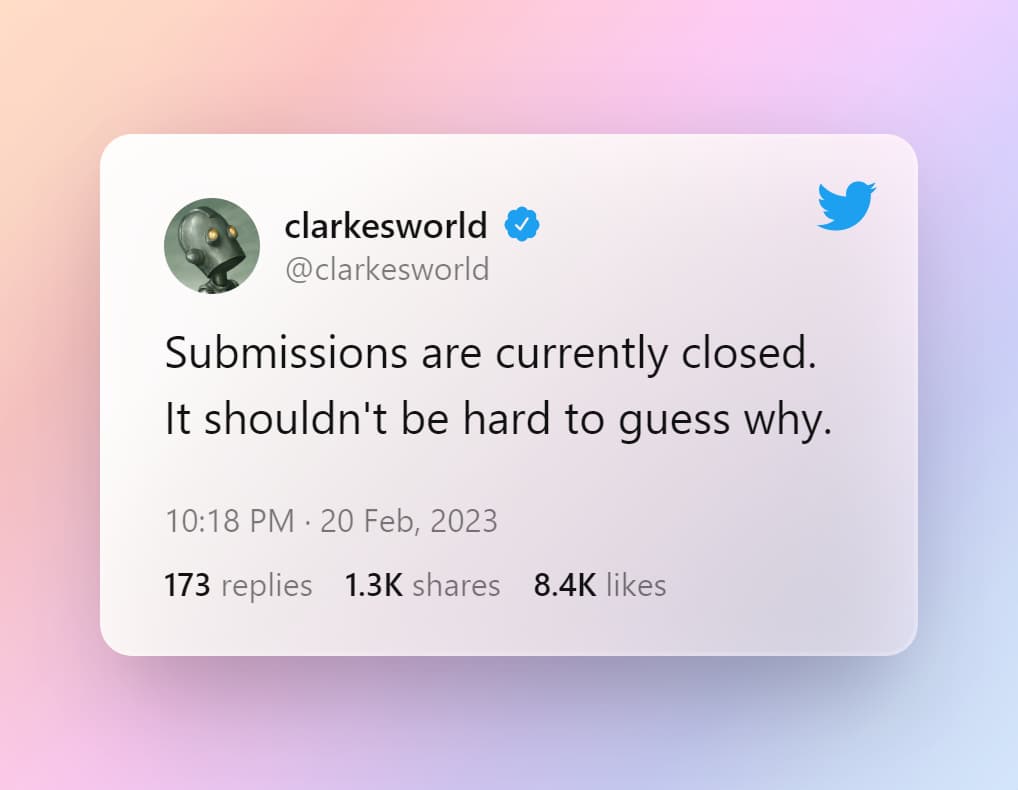

A popular sci-fi magazine shut off submissions amid a flood of AI-written slop

The inundation of AI-generated content quickly reached a point where a popular science fiction magazine, Clarkesworld, had to cease submissions due to the overwhelming influx. Surprisingly, this occurred less than six months after the release of ChatGPT.

In February, the magazine took the drastic step of shutting down submissions, citing a deluge of AI-generated material, much of it low quality. Clarkesworld’s editor, Neil Clarke, expressed astonishment at the unprecedented scale of plagiarism and fraudulent submissions. Notably, many of these submissions shared the same monotonous title, “The Last Hope.”

Blaming the rise of this issue on internet “side hustle” content creators, Clarke pointed to those who propagated the notion that submitting AI-generated articles

OpenAI fires and then rehires Sam Altman

OpenAI added a significant dose of corporate drama to the AI landscape of 2023. In November, the board of directors fired Sam Altman, a highly successful CEO. What made it even more peculiar was that the board gave no prior notice and did not disclose its reasons for the dismissal.

In a swift response, nearly all OpenAI employees threatened to resign unless Altman was reinstated. Within a week, Altman was back at the company, returning as something of a hero. The reshuffling also extended to the board of directors: every member except one was replaced in the aftermath of this unusual sequence of events.