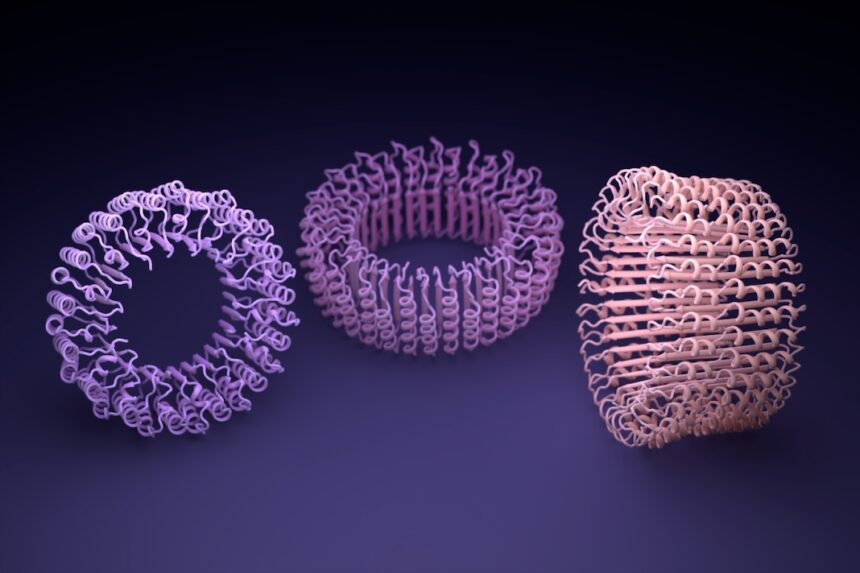

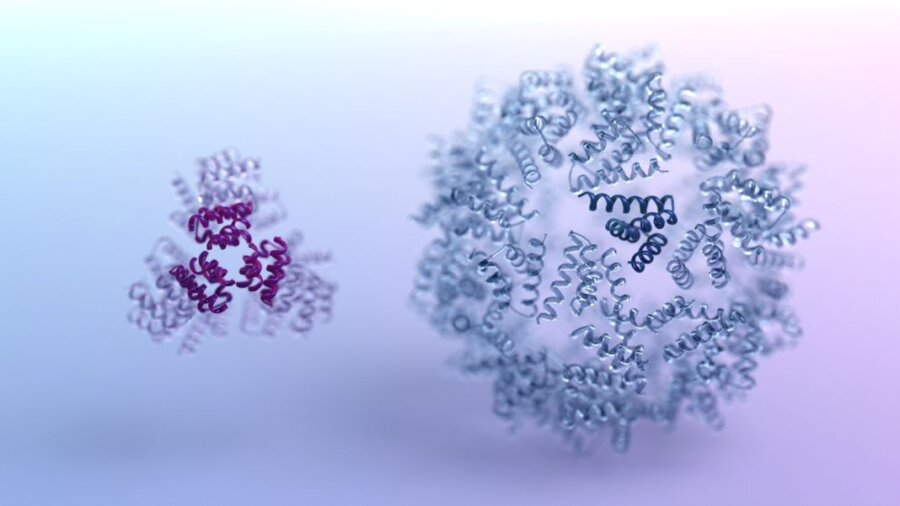

Two decades ago, the notion of engineering designer proteins seemed like a distant dream. Fast forward to today, and thanks to AI, custom proteins have become commonplace. These made-to-order proteins often possess unique shapes or components, granting them abilities previously unseen in nature. This transformative technology extends to diverse applications, from developing longer-lasting drugs and protein-based vaccines to creating greener biofuels and proteins capable of degrading plastics.

Custom protein design relies heavily on deep learning. Large language models, similar to the AI behind OpenAI’s ChatGPT, are generating millions of structures beyond human imagination. “It’s hugely empowering,” said Dr Neil King of the University of Washington. “Things that were impossible a year and a half ago, now you just do it.”

However, as the field advances rapidly and gains traction in medicine and bioengineering, concerns are emerging about potential nefarious uses of these technologies. In a recent essay in Science, two experts in the field, Dr David Baker and Dr George Church, emphasize the importance of establishing biosecurity measures for designer proteins, paralleling ongoing conversations about AI safety.

The essay suggests incorporating barcodes into the genetic sequence of synthetic proteins. This approach, akin to an audit trail, enables easy tracing back to the origin of any designer protein, offering a safeguard against potential threats.
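To make the audit-trail idea concrete, here is a minimal toy sketch in Python. It is not the scheme proposed in the essay, which does not specify an encoding. The barcode length, the hash-based encoding, the `BarcodeRegistry` class, and the origin label are all hypothetical, chosen only for illustration. Appending the barcode to the end of the sequence is also a simplification with no claim to biological validity.

```python
import hashlib
from typing import Optional

BASES = "ACGT"
BARCODE_LENGTH = 20  # hypothetical length, chosen only for this sketch


def make_barcode(origin: str, length: int = BARCODE_LENGTH) -> str:
    """Derive a deterministic DNA-alphabet barcode from an origin label."""
    digest = hashlib.sha256(origin.encode("utf-8")).digest()
    letters = []
    for byte in digest:
        # Each byte holds four 2-bit values; map each value to one base.
        for shift in (6, 4, 2, 0):
            letters.append(BASES[(byte >> shift) & 0b11])
    return "".join(letters)[:length]


class BarcodeRegistry:
    """Toy stand-in for a shared record of who made which sequence."""

    def __init__(self) -> None:
        self._origins = {}  # barcode -> origin label

    def tag(self, sequence: str, origin: str) -> str:
        """Record the origin and return the sequence with its barcode appended."""
        barcode = make_barcode(origin)
        self._origins[barcode] = origin
        return sequence + barcode

    def trace(self, sequence: str) -> Optional[str]:
        """Return the recorded origin whose barcode occurs in the sequence."""
        for barcode, origin in self._origins.items():
            if barcode in sequence:
                return origin
        return None


registry = BarcodeRegistry()
tagged = registry.tag("ATGGCCAAATTTGGG", "example-lab/design-001")
print(registry.trace(tagged))
```

A production scheme would need much more than this, such as barcodes that survive mutation and cannot be trivially removed, but the lookup step captures the basic idea of tracing a sequence back to its source.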

The convergence of designer proteins and AI makes biosecurity policy necessary. The essay’s authors stress that risks must be identified and addressed to prevent the misuse of custom proteins.

Designer proteins are closely tied to AI, which plays a crucial role in designing them quickly. Multiple strategies, including structure-based AI and large language models like the one behind ChatGPT, have accelerated the design process. Because these AI-driven programs could be used to alter the building blocks of life, they are prompting calls for stringent regulation.

While AI safety regulations are in progress globally, synthetic proteins are not explicitly addressed. However, new AI legislation is underway, with the United Nations’ advisory body on AI expected to release guidelines later this year. The authors argue for a global effort within the field to self-regulate and propose measures such as documenting each new protein’s underlying DNA to ensure safety.

At the 2023 AI Safety Summit, experts emphasized the importance of documenting synthetic DNA sequences in a global database, which would make potential risks easier to identify. The authors stress the need to balance data sharing against the protection of trade secrets. They suggest that adding barcodes to genetic sequences during synthesis could enhance security and help prevent the recreation of potentially harmful products.

The road to ensuring the safety of designer proteins involves global collaboration among scientists, research institutions, and governments. Past successes in establishing safety and sharing guidelines in controversial fields, such as genetic engineering and AI, serve as examples of how international cooperation can advance cutting-edge research safely and equitably.