It might seem that Apple is trailing in the AI race, especially since ChatGPT took off in late 2022, but the company appears to have been strategically patient, possibly waiting for the right moment to unveil its work. Recent rumours and reports suggest that Apple has held talks with both OpenAI and Google to bolster its AI capabilities, and that it has been developing its own AI model, known as Ajax.

Apple's published AI research offers clues about the company's strategy. Predicting specific products from research papers is difficult, but there are signs that Apple could showcase some of this work at its WWDC conference in June.

One promising line of work points to a significant upgrade for Siri. Apple's researchers are trying to make large language models (LLMs) fast and practical enough to run on the limited hardware of a smartphone. One recent study explored keeping model data that would normally have to sit in RAM on the device's flash storage instead, loading it as needed. This approach could let phones run LLMs faster, and run larger ones, than their memory alone would allow.

Another project, EELBERT, shrank a model based on Google's BERT to a fraction of its original size with little loss in quality, though at some cost in latency. This fits Apple's broader goal of balancing model size against efficiency and usefulness.

Siri is a clear priority. Some Apple researchers are investigating ways to activate Siri without a wake word so that interactions feel more natural, while others are working on improving how Siri understands rare words and handles ambiguous queries.

Beyond virtual assistants, Apple's AI research covers a range of applications. In health, AI could analyze the large volume of biometric data collected by Apple devices to provide actionable insights. Creative tools are another focus: Keyframer lets users iteratively refine AI-generated designs, and MGIE edits images based on plain-language descriptions.

Apple is also exploring how AI could improve the way people experience music, for example with tools that separate vocals from instruments. Features like this could change how listeners interact with songs on platforms such as Apple Music.

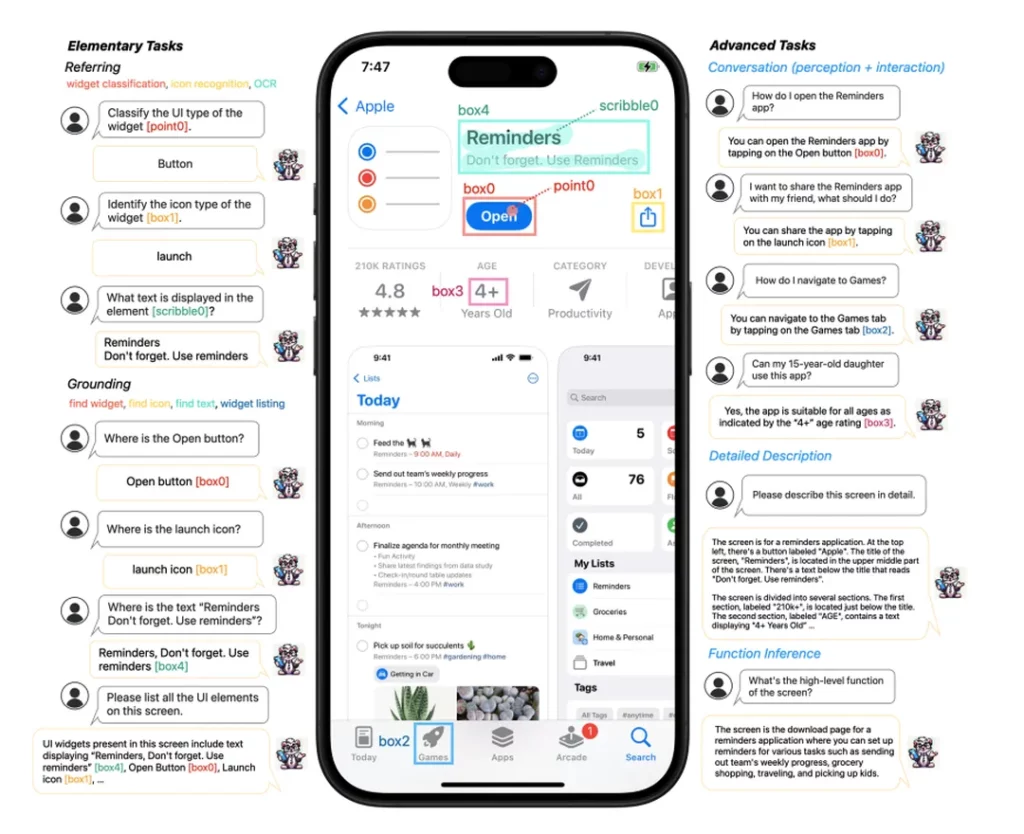

Perhaps the most ambitious project is Ferret, a multimodal LLM that understands both text and images, which could change how devices interpret the world around them. It could improve app navigation and accessibility, and even change how people use their phones day to day.

These projects are still at the research stage, but their implications are significant, and major announcements are expected at the upcoming WWDC. Apple CEO Tim Cook has hinted at major AI developments, which suggests that Apple may be doing more than catching up: it could be preparing to redefine the smartphone experience and make Siri an indispensable tool.