At Google, a new era of artificial intelligence is dawning, one that CEO Sundar Pichai calls the “Gemini era.” Gemini is Google’s latest large language model (LLM), first introduced by Pichai at the I/O developer conference in May and now unveiled to the public. Pichai expects Gemini to significantly affect many Google products, emphasizing that improvements to the underlying technology flow through to the company’s diverse offerings.

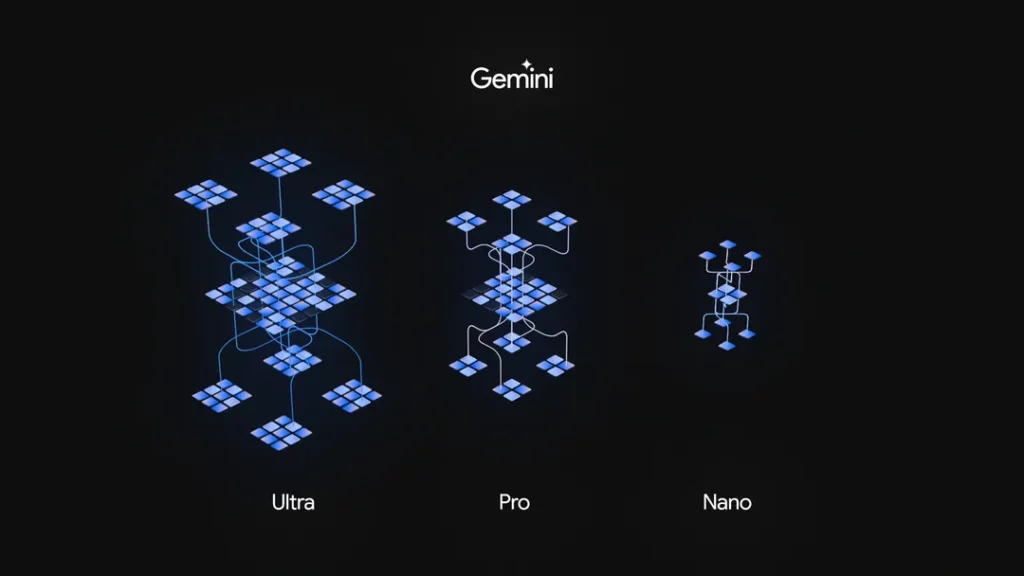

Gemini is more than a single AI model; it comes in distinct versions tailored to different needs. Gemini Nano is a lightweight variant designed to run natively and offline on Android devices. Gemini Pro, a more robust version set to power various Google AI services, serves as the foundation for Bard. Lastly, Gemini Ultra, Google’s most capable LLM to date, is primarily targeted at data centres and enterprise applications.

The deployment of Gemini unfolds in stages: Bard is now powered by Gemini Pro, Pixel 8 Pro users get new features through Gemini Nano, and Gemini Ultra is slated for release next year. Starting December 13th, developers and enterprise clients can access Gemini Pro through Google AI Studio or Vertex AI in Google Cloud. Although Gemini is currently available only in English, Pichai anticipates its integration into Google’s global ecosystem, encompassing the search engine, ad products, Chrome browser, and more.

The unveiling of Gemini is strategically timed, marking Google’s response to the dominance of OpenAI’s ChatGPT, which took the AI landscape by storm a year ago. A showdown between OpenAI’s GPT-4 and Google’s Gemini is imminent, and both companies are keenly aware of the stakes. Demis Hassabis, CEO of Google DeepMind, says that extensive benchmarking of the two models favoured Gemini in 30 out of 32 categories, with particular strength in understanding and interacting with video and audio, a deliberate focus in Gemini’s development.

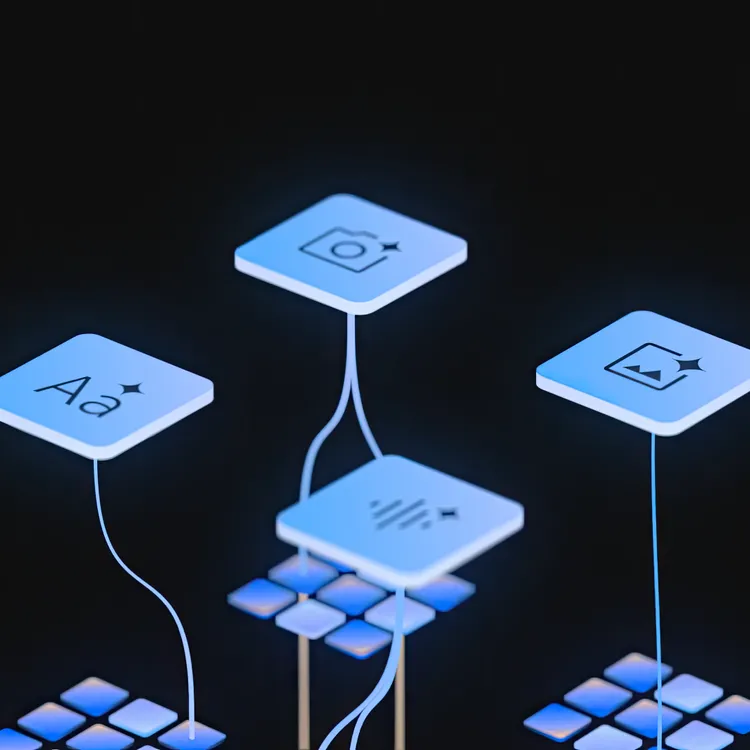

Gemini’s prowess extends beyond text, incorporating multimodal capabilities for images, video, and audio. Google’s approach involves building a single multisensory model rather than training separate models for different inputs. The ultimate vision for Gemini includes expanding its awareness and accuracy by incorporating additional senses and refining its understanding of the world.

While benchmarks provide insights, the true measure of Gemini’s capabilities will emerge as everyday users employ it for diverse tasks. Google sees coding as a potent application for Gemini and is introducing a new code-generating system called AlphaCode 2, which it says performs better than 85 per cent of coding competition participants.

Efficiency is a key highlight: Gemini was trained on Google’s Tensor Processing Units (TPUs) and is both faster and more cost-effective to run than its predecessors. Alongside the model, Google is introducing the TPU v5p, a new version of its TPU system tailored for training and running large-scale models in data centres.

Pichai and Hassabis view the launch of Gemini as a transformative milestone and a pivotal step in Google’s AI journey. Recognizing the race toward artificial general intelligence (AGI), they emphasize a cautious yet optimistic approach to the evolving AI landscape. Google underscores its commitment to safety and responsibility, subjecting Gemini to rigorous internal and external testing. Gemini Ultra’s gradual release reflects Google’s meticulous approach, akin to a controlled beta, ensuring a secure exploration of the model’s capabilities.

For Pichai, who has described AI’s transformative potential as surpassing that of fire or electricity, Gemini represents a monumental beginning, one whose impact on Google’s trajectory could eventually exceed that of the web itself.